FilmGate reached out last week to see if I was working on anything new. Outside of work, I haven’t been, but I told ’em I had something cooking. At the very least, it’s time for me to make a new, better version of the energy ball.

In my last post, I asked if you believe in destiny. Well, today, after months of ignoring this project, I go on my GitHub and see that a guy starred my EnergyBall V2 repo. I check out his profile and, in the very first repo I click on, I find this ↓ …HOW THE F#%K?!! I need to reach out and see if he’s down to jam. At the very least, I NEED that awesome lightning shader.

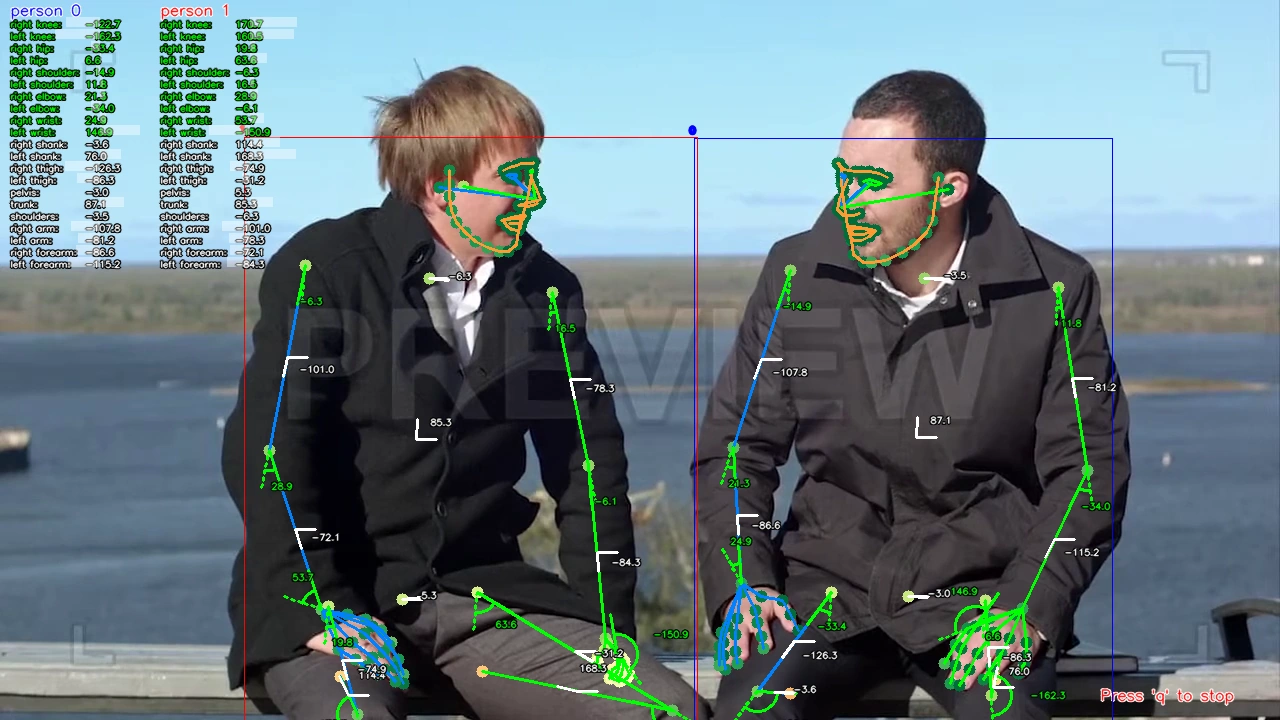

I’ve been wanting to move away from the Kinect and toward webcam-based tracking, but I don’t think that’s realistic yet. At the end of the day, simply having a live video overlay will be an upgrade. I should go for the lower-hanging fruit: mount a webcam on top of my Kinect and overlay the Unity output on that camera feed. Or grab the even lower-hanging fruit and just enable the Kinect’s own camera output in Unity. Still, you know I had to give the Sports2D package a try.

I brought my laptop over to my dad and tried getting the program to recognize two pairs of hands (his and mine), but it didn’t seem to work. Was the package broken, or was there just not enough light in my dad’s room for my crappy webcam?

I decided to be diligent and test it on a stock video, and it detected everything correctly. That points to my setup, not the package, so I just need a better camera. I have a Sony A5000 mirrorless camera I can stream to my PC, but that setup is cumbersome.

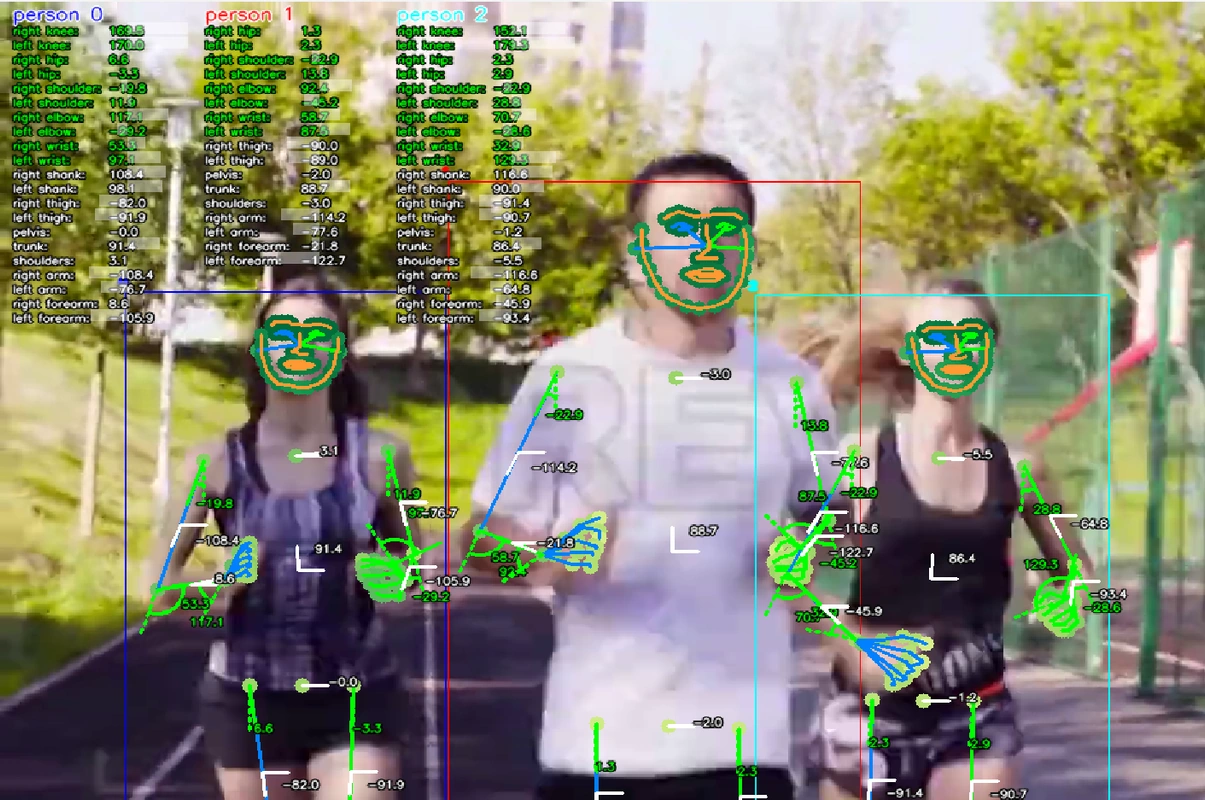

I gave it a trickier test (more motion and a third person), and it still tracked fine.

All this to say: the hand tracking works well, but how easily can I sync the data to my Unity scene? That’s what I have to explore next.
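I haven’t built this yet, but one common pattern, sketched here purely as an assumption, is to stream each frame’s hand keypoints from Python to Unity as small JSON packets over UDP on localhost, with a C# script in Unity listening via `UdpClient`. The port number, the payload layout, and the `encode_hands`/`send_frame` helpers are all hypothetical; none of this uses Sports2D’s actual output format.

```python
import json
import socket

# Hypothetical destination: a Unity script listening on this UDP port (assumption).
UNITY_HOST = "127.0.0.1"
UNITY_PORT = 5065


def encode_hands(frame_idx, hands):
    """Serialize one frame of hand keypoints to UTF-8 JSON bytes.

    `hands` is assumed to be a list of hands, each a list of (x, y) pixel
    coordinates. This layout is illustrative, not Sports2D's output format.
    """
    payload = {
        "frame": frame_idx,
        "hands": [[[float(x), float(y)] for x, y in hand] for hand in hands],
    }
    return json.dumps(payload).encode("utf-8")


def send_frame(sock, frame_idx, hands, addr=(UNITY_HOST, UNITY_PORT)):
    """Send one frame as a single datagram; no acknowledgement or retry."""
    sock.sendto(encode_hands(frame_idx, hands), addr)


if __name__ == "__main__":
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Dummy data standing in for tracker output: two hands, two keypoints each.
    fake_hands = [[(120, 340), (135, 310)], [(480, 330), (470, 300)]]
    send_frame(sock, 0, fake_hands)
    sock.close()
```

UDP fits a live overlay because a dropped or late frame is harmless: the Unity side can just render whatever packet arrived most recently instead of waiting on a backlog.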