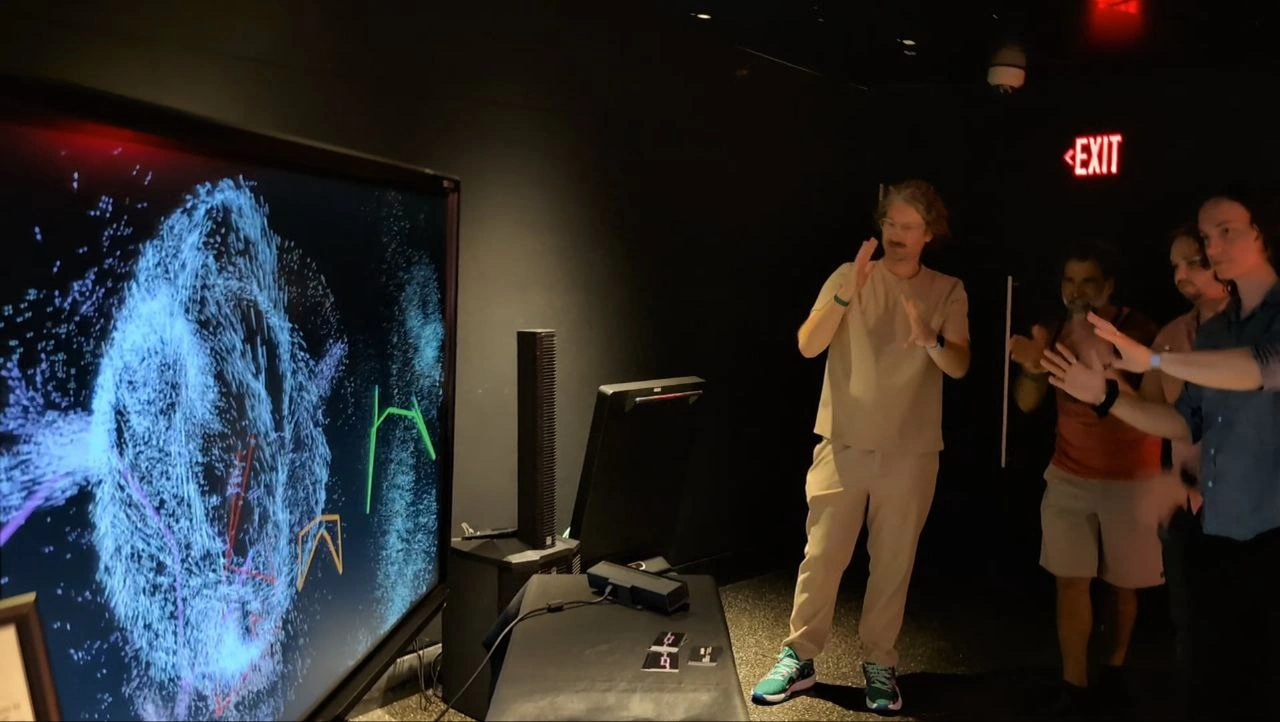

The event last night was a huge success! From a presentation standpoint, at least. On the technical side, there were some bugs, but thankfully the users were all new to the experience, so none of them noticed. Before I delve into the details, though, I'll give myself a huge pat on the back and paste some footage in here:

Anyhow…

In my quest to add animations to the hand states, I broke hand state tracking for actual Kinect players and had no idea until I set up my project at the museum. This underscores a very important lesson: no matter how much I trust my dummy player, I always need to test on the Kinect before assuming a feature works. Thankfully, I had tested hand tracking on the Kinect the night before, so to fix the issue I just did a git checkout of my 8/14 commit. I lost the attraction radius scaling on hand state changes, but like I said, new users don't miss what they've never had.

Second, there was an important piece of code I forgot to implement and an important keyboard shortcut I forgot to write down. In my V1 project, I had a simple function call in the Update() loop that let me use the left and right mouse buttons to trigger a soft or a hard reset of the tracked bodies. Adding it would have been as simple as copying and pasting a few lines of code, but by the time I remembered, people were already playing with their energy balls.
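Those few lines boil down to a per-frame check on two mouse buttons. Here's a rough, language-agnostic sketch of the idea in Python (not the actual Unity C# from V1). `BodyTracker`, `soft_reset`, `hard_reset`, and what each reset clears are all hypothetical, since what the V1 resets actually did isn't spelled out here. The function is meant to be called once per frame, the role `Update()` plays in Unity; with Unity's legacy Input Manager, the button checks would presumably be `Input.GetMouseButtonDown(0)` for left and `Input.GetMouseButtonDown(1)` for right.

```python
from __future__ import annotations

# Mouse button indices, following the convention of Unity's legacy
# Input.GetMouseButtonDown(0) / (1) for left / right.
LEFT_BUTTON = 0
RIGHT_BUTTON = 1


class BodyTracker:
    """Hypothetical stand-in for whatever manages the tracked Kinect bodies."""

    def __init__(self) -> None:
        self.players: dict[int, dict] = {}  # body id -> per-player tracking data
        self.resets: list[str] = []         # history of resets, for illustration

    def soft_reset(self) -> None:
        # Assumed meaning: keep every player but wipe its tracking data
        # so it gets rebuilt from the next frames.
        for data in self.players.values():
            data.clear()
        self.resets.append("soft")

    def hard_reset(self) -> None:
        # Assumed meaning: drop every player so bodies re-register from scratch.
        self.players.clear()
        self.resets.append("hard")


def handle_reset_input(pressed_this_frame: set[int], tracker: BodyTracker) -> None:
    """Call once per frame with the mouse buttons pressed during that frame."""
    if LEFT_BUTTON in pressed_this_frame:
        tracker.soft_reset()
    elif RIGHT_BUTTON in pressed_this_frame:  # left wins if both are clicked at once
        tracker.hard_reset()


# Usage: simulate three frames of input.
tracker = BodyTracker()
tracker.players = {101: {"hand": "closed"}, 102: {"hand": "open"}}
handle_reset_input(set(), tracker)           # no click: nothing happens
handle_reset_input({LEFT_BUTTON}, tracker)   # soft reset: players kept, data wiped
after_soft = {body: dict(data) for body, data in tracker.players.items()}
handle_reset_input({RIGHT_BUTTON}, tracker)  # hard reset: players dropped
```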

Because I was outputting my laptop screen to a large flatscreen TV, it would have helped to edit the code on my laptop screen while the experience kept running on the TV. Unfortunately, I couldn't remember the keyboard shortcut to switch a fullscreen window to windowed mode, so I couldn't drag the experience over to the other screen. In the heat of the moment, I also couldn't find the right phrasing to ask ChatGPT for the shortcut. Today, with the pressure off, I found the words.

Alt+Enter. Alt+Enter. I’ve now written it down, and I intend to memorize it.

The last (and, in my opinion, biggest) bummer was not being able to get the Kinect sensor working on my Acer Predator laptop with its 4080 GPU. I had to settle for my tried-and-tested (and worn-out) Aorus with its 3080. The drop in frame rate was noticeable to me, but once again…

It turns out that installing the newest version of the Kinect SDK for Windows doesn’t actually install the newest Kinect drivers. I had to go to Windows Update to get the correct ones installed, and once I did that, the Kinect worked. Easy fix. What wouldn’t I have given for just one more hour of setup before the event!

Well, it took over an hour, but I fixed the bug that kept the particle animations from playing on hand state changes. I spent a long time scratching my head, trying to figure out why the player's previous hand states always seemed to match their current hand states, even when I set them after the rest of the code had run. I eventually realized I was completely overcomplicating the matter. All I needed to do was create a bool in the playerConstructor to keep track of the hand state. Simple as that.

Not even using previous hand states anymore lolz
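The bool trick is essentially edge detection: compare the incoming hand state against a stored flag and only fire the animation on the frame it flips. Here's a minimal Python sketch of that idea, not the actual Unity code. `Player`, `update_hand`, the simplified `HandState` enum, and the animation names `close_burst`/`open_burst` are hypothetical stand-ins, and skipping `UNKNOWN` frames is an assumption about how tracking dropouts should be handled.

```python
from enum import Enum


class HandState(Enum):
    # Simplified stand-in for the tracker's reported hand states (assumption).
    OPEN = "open"
    CLOSED = "closed"
    UNKNOWN = "unknown"


class Player:
    def __init__(self) -> None:
        # One bool, set up in the constructor, instead of "previous hand state" bookkeeping.
        self.hand_closed = False
        self.triggered = []  # animations fired, recorded for illustration

    def update_hand(self, state: HandState) -> None:
        """Call once per frame with the latest tracked state for this hand."""
        if state is HandState.UNKNOWN:
            return  # assumption: ignore frames where tracking drops out
        is_closed = state is HandState.CLOSED
        if is_closed != self.hand_closed:
            # Only reached on the frame the hand actually opens or closes.
            self.triggered.append("close_burst" if is_closed else "open_burst")
            self.hand_closed = is_closed  # update the bool *after* comparing


player = Player()
frames = [HandState.OPEN, HandState.OPEN, HandState.CLOSED, HandState.CLOSED,
          HandState.UNKNOWN, HandState.CLOSED, HandState.OPEN]
for state in frames:
    player.update_hand(state)
# player.triggered is now ["close_burst", "open_burst"]
```

Because the bool is only written inside the branch, after the comparison, the current frame's state can never overwrite it before the check runs. Tracking both hands would just mean one such bool per hand.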

With this taken care of, I can rest easy knowing that the next time I set this thing up, there won't be any unexpected bugs. However, since I still need to implement the single-open-hand state behavior, there's plenty of time left for me to break things :)

Tags: gamedev kinect unity debugging vfx animation events footage