My priority today is setting up player integration with the Azure Kinect, and then with the Kinect V2 as a fallback. Compared to the V2, the Azure introduces some additional joints for the face and clavicles. Unfortunately, the hand joints are the same, but I already knew that.

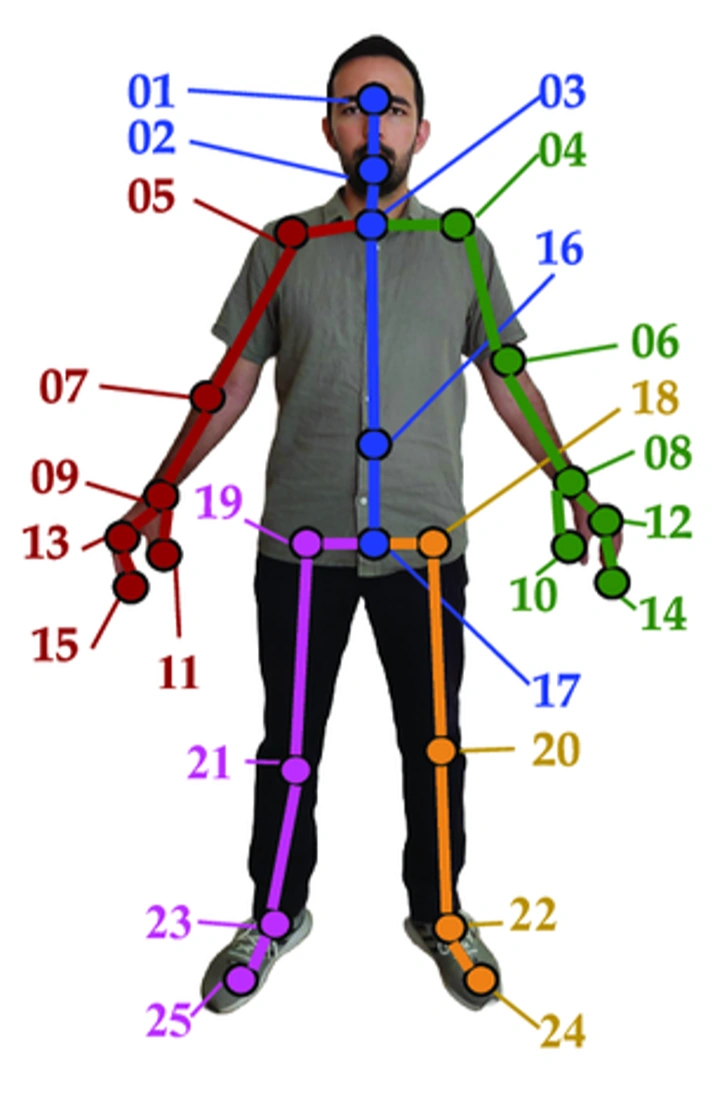

Kinect V2

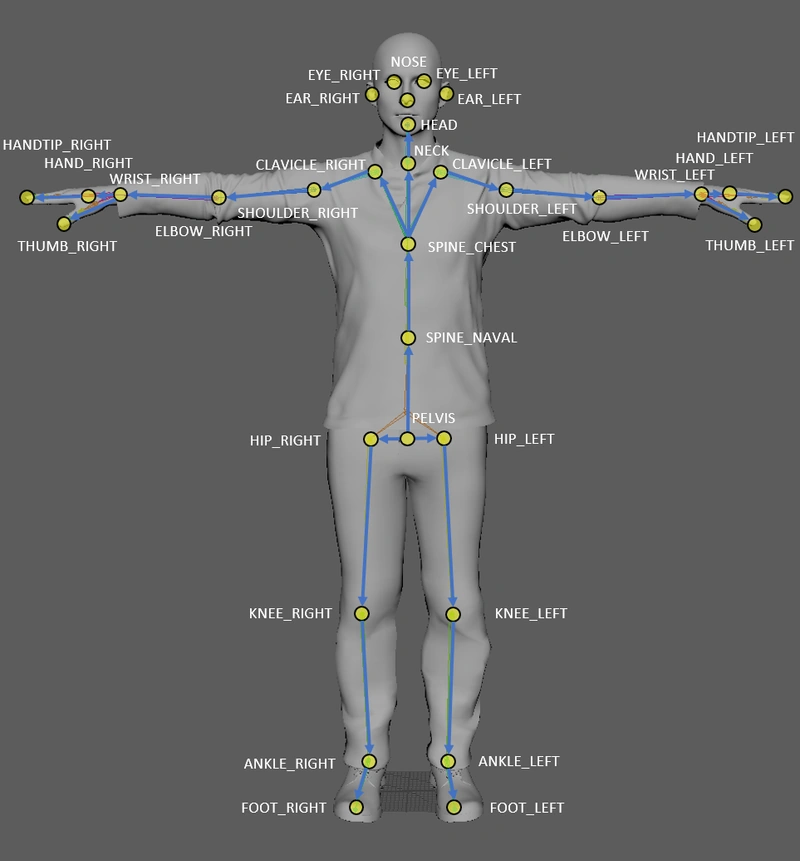

Azure Kinect

In my original project, I use a simple BodySourceManager script to track when players are added, updated, and removed in the scene. The Azure Kinect Sample pack comes with a far more robust Kinect Manager script, which I intend to pare down for my project’s purposes. I’ve already done this before with that dev’s Kinect V2 Sample pack for my Inherit Avatar project.
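As a rough illustration of that add/update/remove bookkeeping, here is a minimal sketch of the logic in Python. It is not the BodySourceManager or Kinect Manager code (in Unity this would be C#), and `PlayerTracker` and its `update` method are hypothetical names. It assumes the sensor hands back a list of tracked body IDs every frame.

```python
from typing import Dict, Iterable, List, Set


class PlayerTracker:
    """Hypothetical helper: diffs tracked body IDs between frames."""

    def __init__(self) -> None:
        # IDs that were present in the previous frame.
        self.known: Set[int] = set()

    def update(self, tracked_ids: Iterable[int]) -> Dict[str, List[int]]:
        current = set(tracked_ids)
        events = {
            # Seen now but not last frame: spawn a player object.
            "added": sorted(current - self.known),
            # Seen in both frames: refresh joint positions.
            "updated": sorted(current & self.known),
            # Seen last frame but not now: destroy the player object.
            "removed": sorted(self.known - current),
        }
        self.known = current
        return events


tracker = PlayerTracker()
first = tracker.update([1, 2])   # two people walk in
second = tracker.update([2, 3])  # person 1 leaves, person 3 arrives
```

Keeping only the previous frame's IDs is enough to derive all three events, which is why a simple manager script can get away with so little state.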

I thought it might be a nice visual feature to show just the user’s hands in the scene. The UserMeshDemo scene draws a bounding box around the user (KinectManager.GetUserBoundingBox) by comparing the min/max left/right positions of all joints. I created a new function, GetUserRegionBoundingBox, to grab just the left and right hand bounding boxes.
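The min/max idea can be sketched in a few lines. This is a Python illustration under my own assumptions, not the sample pack's implementation. The helper names (`bounding_box`, `region_bounding_box`) and the joint names in `LEFT_HAND` are hypothetical stand-ins, and joints are assumed to arrive as a name-to-position dictionary.

```python
from typing import Dict, Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]
Box = Tuple[Vec3, Vec3]  # (min corner, max corner)


def bounding_box(points: Iterable[Vec3]) -> Optional[Box]:
    """Axis-aligned box from the per-axis min and max of the points."""
    pts = list(points)
    if not pts:
        return None  # nothing tracked, so there is no box
    mins = tuple(min(p[i] for p in pts) for i in range(3))
    maxs = tuple(max(p[i] for p in pts) for i in range(3))
    return mins, maxs


def region_bounding_box(joints: Dict[str, Vec3],
                        region: Iterable[str]) -> Optional[Box]:
    """Same box, restricted to the named joints that are present."""
    return bounding_box(joints[name] for name in region if name in joints)


# Illustrative joint names only; the real enum names depend on the SDK.
LEFT_HAND = ("WristLeft", "HandLeft", "HandTipLeft", "ThumbLeft")

joints = {
    "Head": (0.0, 1.7, 2.0),
    "WristLeft": (-0.4, 1.0, 1.9),
    "HandLeft": (-0.5, 1.1, 1.8),
    "HandTipLeft": (-0.6, 1.2, 1.8),
    "ThumbLeft": (-0.5, 1.2, 1.7),
}
left_hand_box = region_bounding_box(joints, LEFT_HAND)
```

Passing every joint gives the whole-user box, while passing a subset gives a per-region box, so one function covers both cases.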

So it turns out GetUserBoundingBox doesn’t actually change the area used to mask the user in the scene. I didn’t know this until I fixed several bugs in the demo that were causing the function to never even run (that should have been enough of a clue, honestly). I also didn’t notice that there were already two built-in functions for getting the bounding boxes around the hands. Ugh.

Look at me! Half man, half stick. This is progress, and this progress is due to my discovery of the Cubeman prefab object within the KinectAvatarsDemo1 scene. I’m very happy to see that my point cloud mesh perfectly overlaps with the skeleton. I called my mom over and confirmed this setup works with multiple users.

Something I hadn’t thought of until now is the possibility of using collision detection between users and player bodies. I wonder how feasible it would be to displace a body on impact.

I also played with gesture recognition for the first time and am pleasantly surprised by its speed and accuracy. This will help me gamify the experience more easily. Speaking of gamification, another idea I have is to use the Azure’s eye tracking capabilities to trigger an energy charging animation when a user closes their eyes. Ideas are flowing, but I need to be coding!